Whole-brain computation of cognitive versus acoustic errors in music: A mismatch negativity study

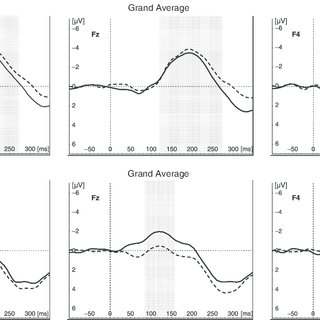

According to predictive coding theory, audition is an active process in which models of expectations (also termed priors) for incoming sounds are constantly updated when errors occur. Recent neuroimaging studies provide empirical support for this theory with electroencephalography (EEG) or magnetoencephalography (MEG) recordings of the mismatch negativity (MMN), which indexes early prediction errors for acoustic features (i.e., deviations from prior expectations within the first 120–250 ms from the onset of the deviant stimulation). These studies also substantiate the existence of ascending, forward connections in the auditory cortex that convey these prediction errors to higher-order brain areas, signaling the ‘new’ information in external stimuli, and of backward connections from higher-order areas of the auditory cortex that predict the activity of lower-order areas.

However, most studies that have measured the MMN have done so for simple acoustic feature errors and have analyzed only the MEG/EEG sensor signals and parameters; only a minority of MMN studies have provided a clear reconstruction of the neural sources. These studies returned a network of active brain areas that were mainly localized in the auditory cortex, especially in Heschl's gyrus and the superior and middle temporal gyri. Additional, weaker generators of the MMN were localized in the inferior frontal cortex and cingulate gyrus. Functional magnetic resonance imaging (fMRI) studies have further confirmed the involvement of the superior temporal gyrus and the right inferior and middle frontal gyri in the generation of the MMN. Taken together, the current literature supports the hypothesis that the auditory cortex is the main generator of the MMN elicited in response to errors of acoustic priors, with frontal generators also involved, perhaps in the process of involuntary “attention switching” and prior updating.

Yet predictive coding theory holds that forward and backward connections should also convey and predict the cognitive features of stimuli, beyond their low-level (e.g., acoustic) features. Within this framework, music listening is a peculiar case: it involves predicting lower-level acoustic features using knowledge (priors) accumulated from life-long exposure to all kinds of sounds and, at the same time, applying music-specific priors based on exposure to a specific musical culture. Together, these predictions allow us to detect changes and mistakes (e.g., in tonality, harmony, transposition, or rhythm) that make music either interesting and pleasurable or, conversely, boring and dissonant. However, which brain areas generate these cognitive prediction errors, and whether they differ from those underlying the MMNs for low-level acoustic features (i.e., by recruiting more frontal sources), are thus far open research questions.

Despite the rich cognitive information contained in musical sequences, most musical MMN experiments (including the fMRI ones) have used simple auditory oddball paradigms in which sequences of coherent sounds are broken by sudden, infrequent deviant sounds that differ in an acoustic feature (e.g., pitch, rhythm, location, timbre). These studies have revealed the automatic predictive processes for sounds that rely on feedforward and backward projections from and to the auditory cortex, but they bear little resemblance to the variety of sounds and features encountered in music. Accordingly, the newer “multi-feature” paradigm introduces a deviation in a single feature into every second sound of a musical pattern, thus allowing for the recording of several prediction errors, including cognitive ones. In one version of this paradigm, six deviants were used (pitch, slide, duration, timbre, location, and intensity), obtaining reliable MMNs for each. Similarly, in the latest “MusMelo” version of this paradigm, six deviants are inserted in a loop of one elaborated musical melody, crucially including two distinct categories of deviants: cognitive (high-level) deviants and acoustic (low-level) ones. Cognitive deviants are changes in the melodic line (melodic contour) that alter the meaning of the music, since they give rise to a varied version of the original melody. Conversely, acoustic deviants sound merely like “mistakes” during the musical performance without producing any actual change in the melodic line. Hence, the MusMelo paradigm offers a unique possibility of measuring the neural indexes of cognitive versus acoustic priors and their related prediction error signals, and of locating the underlying neural sources.
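As a rough illustration of the multi-feature idea described above, the following Python sketch builds a stimulus order in which every second sound is a deviant in one of the six features. It is only a sketch under stated assumptions: the function name `multi_feature_sequence`, the rule that the same deviant type never occurs twice in a row, and the seeded pseudo-random choice are illustrative choices, not details taken from the studies discussed here.

```python
import random

# The six deviant features named in the text for one version of the paradigm.
DEVIANT_TYPES = ["pitch", "slide", "duration", "timbre", "location", "intensity"]


def multi_feature_sequence(n_sounds, seed=0):
    """Return a list of labels, one per sound (hypothetical helper).

    Even positions hold a standard sound; odd positions hold a deviant,
    so every second sound deviates in exactly one feature.
    Assumption: the same deviant type is never drawn twice in a row.
    """
    rng = random.Random(seed)  # seeded for a reproducible order
    sequence = []
    previous = None
    for position in range(n_sounds):
        if position % 2 == 0:
            sequence.append("standard")
        else:
            # Exclude the previous deviant type to avoid immediate repeats.
            choices = [d for d in DEVIANT_TYPES if d != previous]
            previous = rng.choice(choices)
            sequence.append(previous)
    return sequence


sequence = multi_feature_sequence(12)
print(sequence)
```

Because half of the sounds are deviants, a design of this kind collects responses to several deviant types in a single short recording, which is the practical advantage over a classic oddball sequence.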

To summarize, much is known about the MMN prediction error signal and its neural substrate in the auditory cortex for acoustic features. However, research on the role of frontal MMN generators is still scarce and inhomogeneous. This is particularly true of music perception, which draws heavily on both acoustic and cognitive features and depends on the fast, automatic predictive processes that are indexed by the MMN. Related work has been conducted on the early right-anterior negativity (ERAN), which is typically elicited when participants attentively listen to and detect chords violating the conventions of Western harmony, and which relies on the right homolog of the inferior frontal Broca's area and on parietal regions. However, such attentive processes would not explain how we can very promptly grasp even abstract, culture-dependent violations of musical conventions without needing to focus on listening, or how an understanding of musical sounds automatically unfolds over time without any conscious effort.

In this study, we therefore aimed to localize, throughout the whole brain, the automatic predictive processes that are responsible for the fast (120–250 ms from the deviant stimulation) generation of error signals during music listening, and to determine whether these signals differ when the error is computed against acoustic versus cognitive priors. To this end, we investigated the neural sources of acoustic versus cognitive errors in musical melodies, as indexed by the MMN, in a large sample of over 100 participants. We hypothesized that we would observe stronger frontal MMN generators for cognitive deviants and increased auditory cortex responses for MMNs elicited by acoustic deviants.

We also assessed how the activity in MMN generators was modulated by musical expertise, for both acoustic and cognitive errors. The MMN has been repeatedly connected to cognitive abilities, musicianship, musical learning, and musical cognitive abilities. For instance, Putkinen and colleagues showed enhanced MMNs in children exposed to musical training, especially for melody modulation, mistuning, and timbre; this enhancement was not present before exposure to the musical training. Similarly, Kliuchko and colleagues found an overall stronger MMN to timbre, pitch, and slide in jazz musicians compared to classical musicians, non-musicians, and amateurs. Tervaniemi and colleagues showed that MMNs were enhanced for tuning deviants in classical musicians, for timing deviants in classical and jazz musicians, and for transposition deviants in jazz musicians. Moreover, fMRI evidence has consistently demonstrated that musical expertise refines cognitive priors, for instance by allowing musicians to notice subtle harmonic changes and by increasing the activation of their action-observation areas and higher-order frontal areas during mere music listening. For all of these reasons, we hypothesized that musical expertise would increase both acoustic and cognitive MMNs at the sites of their generators.

Story Source:

Materials provided by NeuroImage: Reports. The original text of this story is licensed under a Creative Commons License. Note: Content may be edited for style and length.

Journal Reference: ScienceDirect
